Photo Courtesy of OpenAI

I began researching the impact of artificial intelligence on students’ creativity and ability to think for themselves in an academic setting by first asking ChatGPT, an AI tool, for some input. ChatGPT ultimately gave me two well-written but notably vague paragraphs weighing the pros and cons of AI use in classrooms. The pro section rather arbitrarily emphasized AI’s ability to “facilitate collaborative projects, offering students opportunities to engage in innovative problem solving and express their creativity in new ways.” At first, I was skeptical of this response, as I had never seen ChatGPT used in this way before. Still, when I asked for collaborative project ideas, I was given an extensive list covering various methods for building engagement and connection across disciplines. Meanwhile, in the cons portion of the assessment, ChatGPT said, “The reliance on AI for tasks such as information retrieval and analysis might lead to a reduced emphasis on traditional research and critical thinking skills.” That statement rings true. People are often more likely to rely on AI technologies than to take the time and effort to locate credible sources of information. AI should not be chalked up as the enemy of originality, but people, especially students, should use it cautiously. I encourage people to keep an open mind and remain curious about AI and its place in our future.

Some people call it laziness, while others call it savviness; either way, research and the presentation of information have changed drastically with the development of AI. The main problem we’re facing with AI today is its egregious abuse by students. AI programs such as ChatGPT are especially appealing because they can produce seemingly eloquent essays on any subject from just a few keywords typed in by the user. Trained on vast amounts of text from the internet, ChatGPT will effortlessly generate an essay on well-recorded, well-analyzed literature such as “1984” or “The Iliad” with the simple command “write an essay about ___.” That said, ChatGPT has difficulty homing in on specific details of the books because there is so much information on the web about them, leaving the resulting essay well written but flat and vague. Similarly, for subjects with very little engagement on the internet, ChatGPT’s analysis tends to use flowery language and perfect grammar to convey empty ideas about the topic.

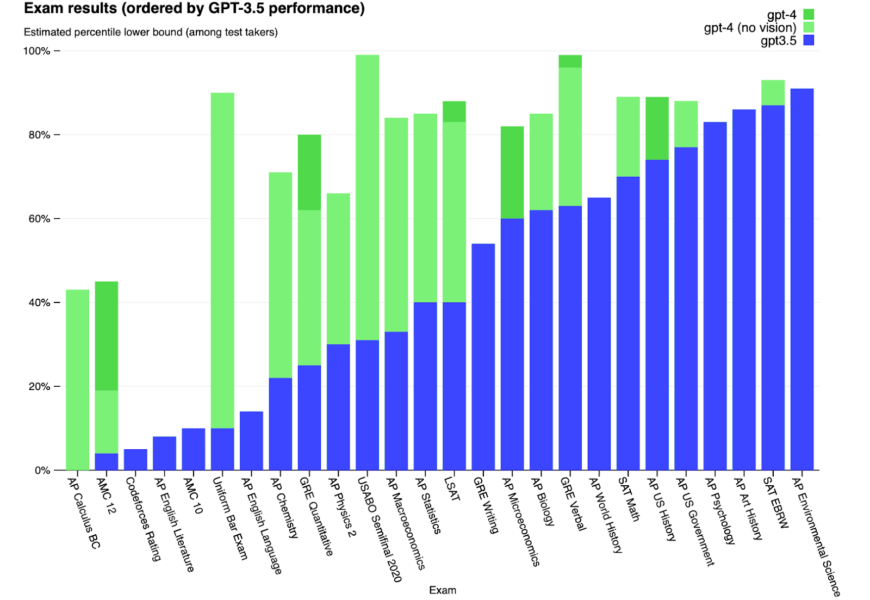

In recent research, ChatGPT-4 tackled various standardized tests. Notably, on AP tests, ChatGPT-4 tended to score very high (a 4 or 5) in history, math, and science courses but remarkably low (a 2) in English, where writing is more subjective. Such scores reflect ChatGPT’s ability to regurgitate information but its inability to form original or nuanced ideas. When asked about AI’s academic effects, one student whose teacher had encouraged the use of ChatGPT for some assignments said, “It’s certainly different from what I’m used to. It’s almost like a teacher telling you to cheat, but I definitely think it is something that could prove helpful once we know how to use it more positively.” Another student said, “I think for kids under the age of 13, it’s a nightmare. Over 13, it can be useful, but the chances of it being used properly with the right intentions are relatively low.” This student pointed out that there’s a big difference between efficiency and laziness, and very few people know how to use ChatGPT for efficiency. “Its gimmick is that it’s advertised as a useful tool, which it can be, but it’s capitalizing on laziness.” Ultimately, students must discover how to use AI to complete tasks efficiently rather than using it as a cheat code.

In comparing quality and effort, it’s logical to claim that the credibility of information decreases as the ease of finding it increases. The less effort we put into research, the less we understand the credibility of what we find, and the quicker we are to believe misinformation, especially if it aligns with our personal biases. Because AI technologies gather information from across the internet, which reflects every facet of the imperfect, biased human mind, there are real questions about their reliability. While using AI programs such as ChatGPT to gather information is far less strenuous than traditional research methods, nothing tells you where the program got its information. If we use AI to simplify the research process, we should use it to help us find credible sources instead of relying on AI as a credible source. AI may be a machine, but it was created by humans, and humans are the source of all the information it generates. AI won’t distinguish between a primary source and a blog unless we tell it to, and that’s where the quality of our research goes down. If we are to learn anything through AI, we must be willing to question its intelligence, as it is based on our own.